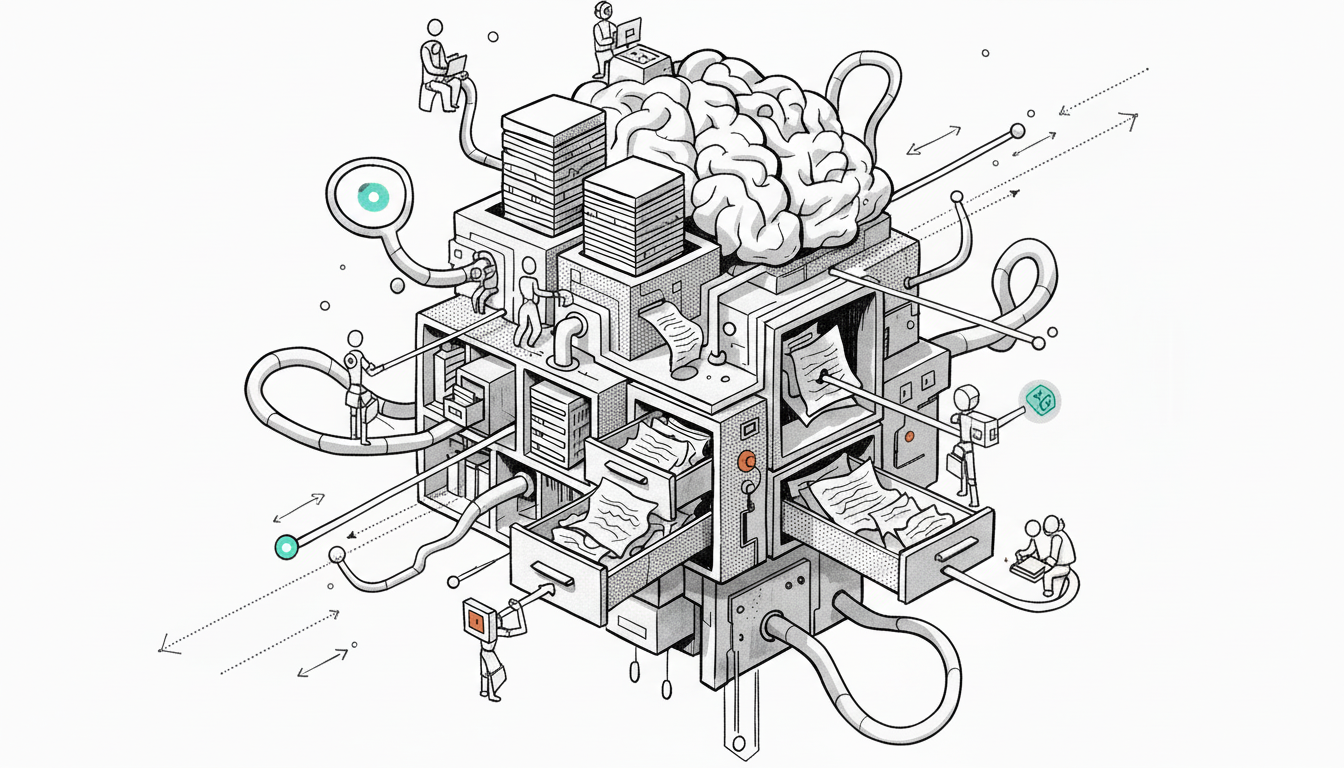

Why Local Memory Isn't Enough: The Case for Shared Agent Learning

Anthropic recently published a thoughtful piece, "Effective harnesses for long-running agents," offering practical guidance on maintaining agent continuity across sessions through progress files, git commits, and feature checklists. The engineering is solid, and for a single agent working on a single project, the approach works well. But there's a fundamental limitation baked into the architecture: those learnings live in local files, which means they're siloed to that specific agent instance and disappear when the project ends.

The Limits of Local Memory

The pattern Anthropic describes treats each agent session as a continuation of previous sessions on the same project, with progress tracked in files like `claude-progress.txt`, test status maintained in JSON files, and git history providing additional context. When the agent wakes up for a new session, it reads these artifacts and picks up where it left off, which elegantly solves the continuity problem for long-running work.
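To make the pattern concrete, here is a minimal sketch of what a session start could look like. Only the `claude-progress.txt` filename comes from the description above; the `test-status.json` name, its flat `{feature: passing}` schema, and the `load_session_context` helper are assumptions made for illustration, not Anthropic's actual harness.

```python
import json
from pathlib import Path


def load_session_context(project_dir: str) -> dict:
    """Collect the local artifacts a new session would read before resuming work."""
    root = Path(project_dir)
    progress_file = root / "claude-progress.txt"  # filename mentioned in the article
    status_file = root / "test-status.json"       # hypothetical name and schema

    # Missing files are treated as "no prior context" rather than as an error.
    notes = progress_file.read_text() if progress_file.exists() else ""
    status = json.loads(status_file.read_text()) if status_file.exists() else {}

    # Features whose tests are not yet passing are the candidates for this session.
    pending = [name for name, passing in status.items() if not passing]
    return {"notes": notes, "pending": pending}
```

Notice that everything this function returns comes from one project directory. That is exactly the property the rest of this post takes issue with.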

What this approach doesn't solve is learning at scale. Consider what happens when you're running an agent across hundreds or thousands of executions in an enterprise environment. Each execution might succeed or fail in subtle ways: maybe the agent generates technically correct output that violates your brand guidelines, or it handles an edge case in a way that works but frustrates users. In a local-file model, these insights exist only in the context of individual runs, with no systematic way to analyze patterns across executions or feed those learnings back into future behavior.
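As a rough illustration of what analyzing patterns across executions could mean, the sketch below aggregates issue tags over many run records and surfaces the ones that recur. The `RunRecord` shape, the tag strings, and the `min_share` threshold are all hypothetical; a real system would likely use richer evaluation data than simple tags.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class RunRecord:
    run_id: str
    succeeded: bool
    issues: list[str] = field(default_factory=list)  # evaluator or reviewer tags


def recurring_issues(runs: list[RunRecord], min_share: float = 0.1) -> list[tuple[str, float]]:
    """Return issue tags that appear in at least `min_share` of all runs."""
    if not runs:
        return []
    # Count each tag at most once per run so a noisy run cannot dominate.
    counts = Counter(tag for run in runs for tag in set(run.issues))
    shares = [
        (tag, n / len(runs))
        for tag, n in counts.items()
        if n / len(runs) >= min_share
    ]
    # Most frequent first; ties broken alphabetically for stable output.
    return sorted(shares, key=lambda item: (-item[1], item[0]))
```

A run can succeed and still carry an issue tag, which is the point: the "technically correct but off-brand" cases only become visible once runs are compared side by side.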

Local memory creates local knowledge, and at enterprise scale, that's simply not sufficient for building agents that consistently meet your quality bar.

Learning at Scale

At Wayfound, we've been building toward a different model for over eighteen months, one where an agent learns not just from its current session but from the accumulated experience of hundreds or thousands of its own executions. Every run gets captured, analyzed against the agent's defined role, goals, and guidelines, and evaluated with input from subject matter experts who understand the nuanced aspects of "what good looks like" for that specific agent.

The mechanism works through MCP integration: when an agent is about to execute a task, it can query Wayfound for relevant failure patterns, improvement suggestions, and quality guidelines drawn from the full history of that agent's behavior in production. Instead of each execution starting from scratch with only local context, every run benefits from the institutional memory built up across all previous executions.
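The shape of that pre-execution query can be sketched as follows. This is not Wayfound's actual MCP tool interface: `GuidanceSource`, `Guidance`, `InMemoryGuidance`, and `build_prompt` are hypothetical names, and the in-memory class stands in for whatever remote call a real integration would make.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Guidance:
    failure_patterns: list[str]
    suggestions: list[str]
    guidelines: list[str]


class GuidanceSource(Protocol):
    """Anything that can return shared guidance for an agent and task."""

    def guidance_for(self, agent_id: str, task: str) -> Guidance: ...


class InMemoryGuidance:
    """Stand-in for a shared store; a real deployment would query a remote service."""

    def __init__(self, by_agent: dict[str, Guidance]):
        self._by_agent = by_agent

    def guidance_for(self, agent_id: str, task: str) -> Guidance:
        # The task is ignored here; a real source could use it to filter results.
        return self._by_agent.get(agent_id, Guidance([], [], []))


def build_prompt(source: GuidanceSource, agent_id: str, task: str) -> str:
    """Prepend shared guidance to the task before the agent executes it."""
    g = source.guidance_for(agent_id, task)
    sections = [
        ("Known failure patterns", g.failure_patterns),
        ("Improvement suggestions", g.suggestions),
        ("Quality guidelines", g.guidelines),
    ]
    lines: list[str] = []
    for title, items in sections:
        if items:  # skip empty sections so the prompt stays short
            lines.append(f"{title}:")
            lines.extend(f"- {item}" for item in items)
    lines.append(f"Task: {task}")
    return "\n".join(lines)
```

Keeping the source behind a small interface means the agent code does not care whether guidance comes from a local stub during development or a shared service in production.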

This transforms how agents improve over time. Rather than relying on developers to manually analyze logs and update prompts, the system creates a continuous feedback loop where insights from past executions actively shape future behavior.

The Human Element

There's another dimension that local files fundamentally cannot capture: expert judgment about quality that resists automation. Some criteria simply can't be reduced to passing or failing tests: "this response is technically accurate but wrong for our audience," "the reasoning is correct but the tone doesn't match our brand," or "this passes all checks but would confuse our users in practice."

These insights live in the heads of your subject matter experts (SMEs), and in a local-file model, they tend to stay there or get manually encoded into prompts through painful trial and error. Wayfound provides a direct path for SMEs to inject their expertise into the system, where that knowledge becomes immediately accessible to the agent on every execution. Your institutional understanding of what quality means stops being tribal knowledge and starts being operational infrastructure that actively guides agent behavior.

From Continuity to Continuous Improvement

The Anthropic pattern is a useful contribution to the conversation around agent architecture, and it solves a real problem that anyone building long-running agents will encounter. Maintaining context across sessions matters, and their approach offers a clean way to achieve it.

But continuity is really just table stakes for production agents. The harder and more valuable problem is building agents that get smarter over time, learning from the full breadth of their executions and incorporating both automated analysis and human expertise into that learning process.

That's the system we've been building at Wayfound for over a year and a half: not just memory for individual sessions, but continuous improvement driven by comprehensive execution analysis and expert feedback. Local files are a reasonable starting point for agent development, but shared learning across executions is where production agents actually need to go.