Context Graphs in the Real World: Company Culture as Critical Context for AI Agents

Recent posts by Jamin Ball, Jaya Gupta and Ashu Garg, Aaron Levie, and others on the need for AI Agents to learn Organizational Context have us quite excited here at Wayfound. We first argued in 2023 that AI agents need a 'cultural context' as an effective buttress for alignment, one that ensures they function well within a specific organization. Now, in January 2026, this idea has finally emerged as the “Big New Idea” in AI.

The big challenge Context Graphs aim to address is getting the “context that lives…in people’s heads” to the AI Agents.

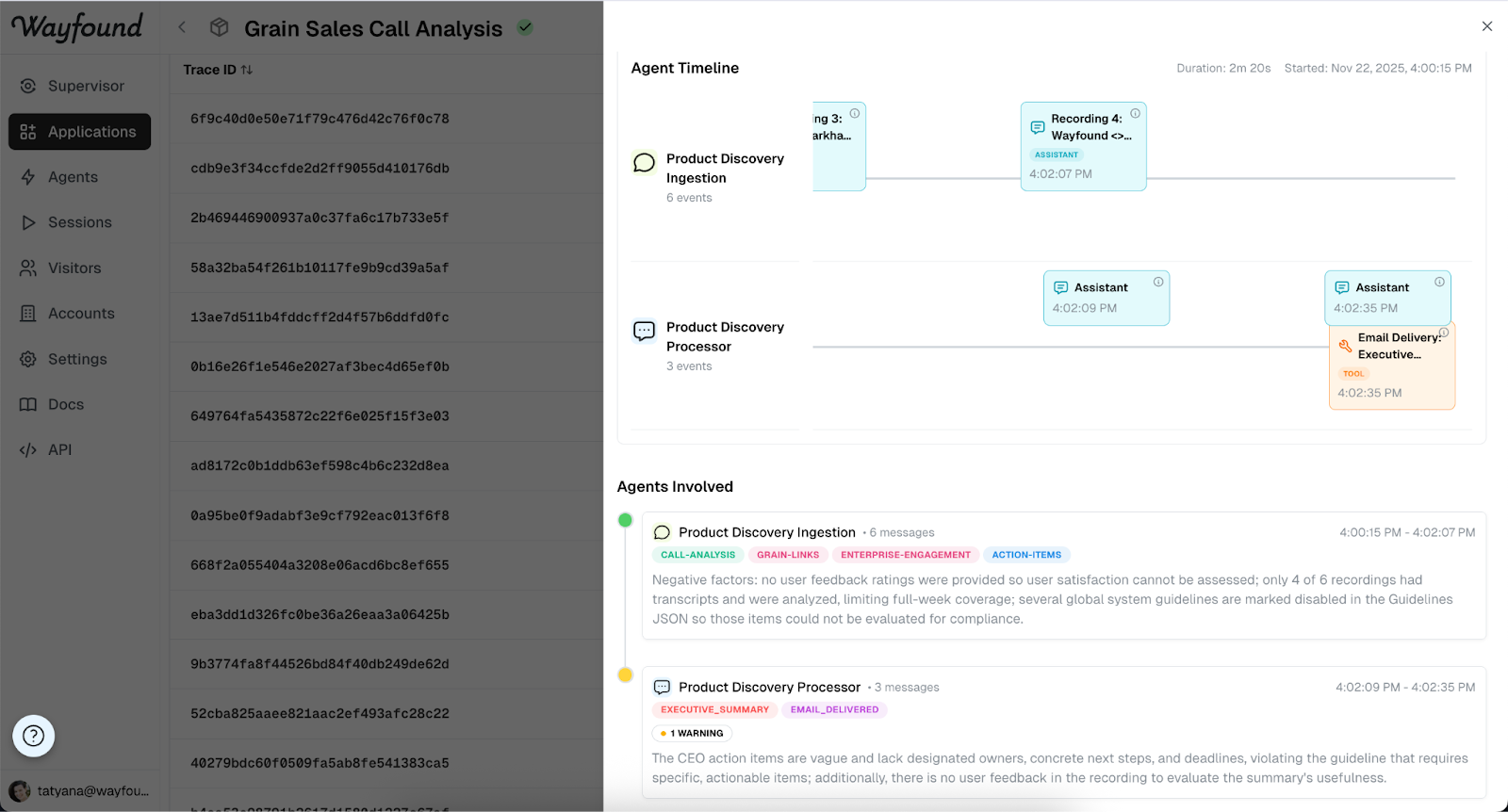

This is already happening in the real world. Companies working with Wayfound, such as Sauce Labs, Ardmore Road Asset Management, and 531 Social, capture the implicit, tacit knowledge that lives in the heads of Subject Matter Experts (SMEs), along with lessons learned from cumulative agent runs, in a shared context within an AI Supervisor. That Supervisor, in turn, uses all of its learned context to supervise AI Agents in real time: it captures their traces, logs, and reasoning, then closes the loop by feeding new instructions grounded in the organization’s specific cultural context back to the AI Agents. Wayfound differs from other approaches to creating Context Graphs for AI agents in the following ways:

- Other Context Graph approaches require canonical definitions of what good work looks like to exist a priori (some rely on “systems of record” or “decision traces” with pre-defined logic). We know from our experience working in large and small companies that what great work looks like exists in the heads of business users: the subject matter experts. Because this knowledge is learned from experience, it is often not written down, and people recognize good versus bad work “when they see it,” not before.

Wayfound allows business users and non-technical subject matter experts to iteratively review the entirety of AI Agent outputs, orchestrations, and decision traces and to define what good looks like over time. We treat the challenge like training a newly hired manager on what good looks like in the context of this specific organization. That is why we built Wayfound to let business experts iteratively add context, give feedback, and train the Supervisor Agent (the stored, shared memory of all Agent actions and decisions and of human feedback on them).

- Context graphs or ontologies defined within a technical architecture become rigid and cannot adapt to an ever-changing environment. This is because they rely on every possible exception and permutation already existing and being prescribed as new rules. From Foundation Capital’s post: “What does this look like in practice? A renewal agent proposes a 20% discount. Policy caps renewals at 10% unless a service-impact exception is approved. The agent pulls three SEV-1 incidents from PagerDuty, an open ‘cancel unless fixed’ escalation in Zendesk, and the prior renewal thread where a VP approved a similar exception last quarter. It routes the exception to Finance. Finance approves. The CRM ends up with one fact: ‘20% discount.’” That 20% discount becomes the new rule and will be applied to future instances, which are presumed to be “one and the same” rather than new-to-the-world circumstances.

Wayfound’s architecture allows logic and decisions to adapt constantly as new circumstances arise. What if there are no prior exceptions in any database? Or what if the prior exceptions don’t exactly match the circumstance at hand? What will the AI Agent do? How do the relevant humans get alerted and involved? And how does the issue get resolved? Because Wayfound’s Supervisor is architected as a high-level reasoning Agent, it reasons against the shared knowledge of all AI agent interactions, and of human feedback on those interactions, to decide whether to alert a human or to create a feedback loop that changes the agent’s own system directives. We call this Supervised Self-Improvement for AI Agents. A higher-order AI Supervisor gathers the feedback, priorities, exceptions, and new circumstances that challenge or stretch an Agent’s current directives, and in doing so Wayfound creates a highly adaptive, constantly evolving system that does not rely on rigid ontologies or rules.
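To make the contrast concrete, here is a toy Python sketch built on the renewal example quoted above. It is not Wayfound’s implementation: the names `Circumstances`, `Supervisor`, `review`, and `record_feedback` are hypothetical, and a simple exact-match lookup stands in for the high-level reasoning described here. It shows two behaviors: a case whose circumstances were already approved goes through, while a case that resembles but does not match a prior exception is escalated to a human; and when a human decides, the rationale, not just the outcome, is fed back as a new directive.

```python
from dataclasses import dataclass, field

# Toy model with hypothetical names; not Wayfound's implementation.
# An exact-match lookup stands in for the Supervisor's high-level reasoning.


@dataclass(frozen=True)
class Circumstances:
    """The facts behind one renewal request (frozen, so it can be stored in a set)."""
    discount_pct: int
    sev1_incidents: int
    open_escalation: bool


@dataclass
class Supervisor:
    policy_cap_pct: int = 10  # documented Rule: renewals capped at 10%
    # Circumstances for which a human has already approved an exception.
    approved_exceptions: set[Circumstances] = field(default_factory=set)
    # Human rationale fed back to the agent as new system directives.
    directives: list[str] = field(default_factory=list)

    def review(self, case: Circumstances) -> str:
        if case.discount_pct <= self.policy_cap_pct:
            return "approve"  # within documented policy
        if case in self.approved_exceptions:
            return "approve"  # the same circumstances were approved before
        # No exact prior: treat it as new-to-the-world and alert a human.
        return "escalate_to_human"

    def record_feedback(self, case: Circumstances, approved: bool, rationale: str) -> None:
        if approved:
            self.approved_exceptions.add(case)
        # Keep the why, not just the "20% discount" outcome.
        self.directives.append(rationale)


sup = Supervisor()
renewal = Circumstances(discount_pct=20, sev1_incidents=3, open_escalation=True)
print(sup.review(renewal))  # escalate_to_human
sup.record_feedback(renewal, True, "Above-cap discounts need documented service impact.")
print(sup.review(renewal))  # approve
print(sup.review(Circumstances(20, 1, False)))  # escalate_to_human
```

The third call is the case the post worries about: circumstances that resemble a prior exception without matching it. In this sketch they go to a person instead of silently inheriting the earlier 20% outcome.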

- Context graphs, as imagined in these papers, are amorphous technical logic orchestrations that are hard to interact with, give feedback to, or understand and fix at scale. Because they are complex, abstract knowledge graphs living in highly technical systems, the business SMEs who are the ultimate arbiters of judgment cannot access, override, or interact with them directly.

Wayfound is built around AI Agent Supervisors, which map to the known organizational hierarchy of human supervisors and managers, and which non-technical experts can train through natural-language feedback. In our product today, we’ve abstracted these technical logic orchestrations and decision traces to make them understandable and, more importantly, accessible to all the business experts in an organization, not just engineers and data scientists.

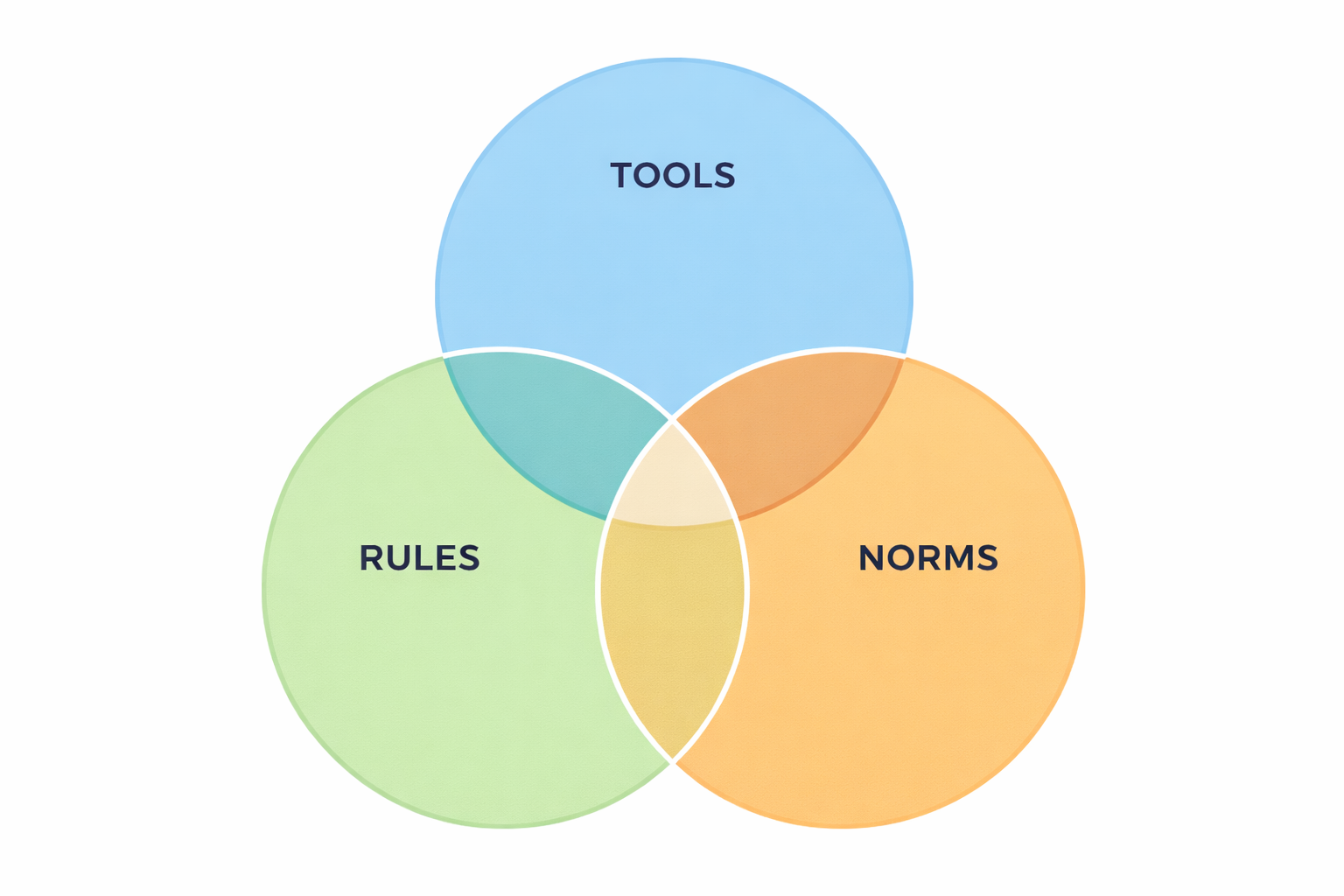

In sum, Wayfound approaches this problem not through a complex technical abstraction of Context Graphing, Ontology Building, or Heuristic shortcuts, but through a process organizations already understand and have optimized: training workers on their specific cultural context. That context is your robust, differentiated Organizational Culture, composed of your pre-existing software systems (your tools), your company policies and brand guidelines (your rules), and your lived experience and tacit knowledge (your norms). We apply this process to AI workers instead of human employees, and we democratize it to include the people who know the business logic best: business users and non-technical subject matter experts.

As Aaron Levie wrote: “These models, by default, don't know anything about your particular team or organization. In an instant, they might be asked to review a legal contract for Ford, and then in the next second be writing code for a new piece of software at Goldman Sachs. They are fully general-purpose superintelligence systems that can take on any task for anyone that’s asking. As a consequence, that means that your company is getting the same expert lawyer as another company, the same engineer, and so on. The question that we will have to wrestle with, in a world where everyone has access to the same intelligence, how does a company differentiate?

…Now, this problem has already plagued companies well before AI agents existed. Companies have always been a collection of various forms of context that they try and extract the most value out of: their processes, intellectual property, unique ideas and roadmap, ways they make decisions, information about their customers, and so on.”

Indeed, this is not a new problem, and to capture the knowledge that resides across the whole organization, we must empower subject matter experts and business users to train AI systems directly, in ways that are familiar to them. This approach has let our early customers see profound performance improvements in their AI agents: from initial agents that behaved like generic idiot-savants to agents that now continually learn the organization’s culture and expectations through Supervisor agents, which share knowledge, learning, and memory across the entirety of AI Agent interactions and encode it into an understanding of what good looks like for future Agent decisions. To do this effectively, we need AI Supervisors trained to understand and adapt to the Norms of a company’s cultural context, not just its prescribed and documented rules or the tools and systems that currently exist.

In 2010, when I was co-leading IDEO’s Organizational Design Practice, we leaned on my anthropology PhD to develop a framework for how culture forms and transforms. At its essence, cultural context develops through the interlinked and consistent formation of Rules, Tools, and Norms. When we gathered examples of large-scale culture creation and transformation across private-sector companies and social movements, the framework proved robust, and it helped propel IDEO’s Organizational Design Practice to tens of millions of dollars in under 18 months.

While the posts about Context Graphs and Ontologies account for the Tools (software systems) and Rules (policies and documented exceptions), what’s missing from the Context Graphs commentary so far is an account of how undocumented and often unspoken Norms are identified and fed into AI agents. Norms are captured neither in databases nor in traces. They live inside the heads of business experts and in their lived experience. At Salesforce and AWS, I worked on projects to democratize software development, largely because we knew even in 2015-2018 that the real expertise lies with business users, and that translating their needs for the engineering team often costs the resulting system some of its fidelity to those needs and its specificity to the business context.

So the solution to this problem, made even more salient in the AI Agent era, is to present traces and decisions in intuitive, highly understandable formats, so that non-technical experts can react to what the AI Supervisor sees in the Agents’ decisions, actions, and behaviors, measured against the Role, Goals, Guidelines, Context, and Feedback those experts have defined for it. This is the best solution we’ve seen to the question Ball, Gupta, Garg, and Levie identify as the biggest obstacle to making AI Agents effective in practice: getting the “context that lives…in people’s heads” to the AI Agents.
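As a rough illustration of those five inputs, the Python sketch below assumes a hypothetical `SupervisorSpec` that holds Role, Goals, Guidelines, Context, and Feedback as plain-language text, and a hypothetical `build_review_prompt` that combines them with one agent trace into instructions a supervisor model could read. The field shapes and prompt layout are assumptions made for illustration, not Wayfound’s product format.

```python
from dataclasses import dataclass, field

# Hypothetical structure; field shapes and prompt layout are assumptions.


@dataclass
class SupervisorSpec:
    role: str
    goals: list[str]
    guidelines: list[str]
    context: list[str]
    # Plain-language notes added by subject matter experts over time.
    feedback: list[str] = field(default_factory=list)


def build_review_prompt(spec: SupervisorSpec, trace: str) -> str:
    """Combine the spec and one agent trace into review instructions."""

    def section(title: str, items: list[str]) -> str:
        return f"## {title}\n" + "\n".join(f"- {item}" for item in items)

    parts = [
        f"## Role\n{spec.role}",
        section("Goals", spec.goals),
        section("Guidelines", spec.guidelines),
        section("Context", spec.context),
        section("Feedback from subject matter experts", spec.feedback),
        f"## Agent trace to review\n{trace}",
        "Explain in plain language where the trace meets or misses the above.",
    ]
    return "\n\n".join(parts)


spec = SupervisorSpec(
    role="Supervisor for renewal agents",
    goals=["Retain at-risk accounts"],
    guidelines=["Cap renewal discounts at 10% unless Finance approves an exception"],
    context=["Incidents come from PagerDuty; escalations come from Zendesk"],
)
spec.feedback.append("Mention open escalations before proposing any discount")
print(build_review_prompt(spec, "Proposed a 20% discount citing three SEV-1 incidents"))
```

Keeping Feedback as ordinary sentences means a subject matter expert can add to it without touching code.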

This is not conceptual. It’s real, and it works. Please reach out to tatyana@wayfound.ai or chad@wayfound.ai if you are ready to fill the context gaps for your AI Agents.